BlenDR Fusion

Detailed Notes | Progress Report Presentation | Final Presentation

(Mar. 2024 ~ Sept. 2024) Improvement on BlenDR, adding multi-angle fusion

Introduction:

After our first rejection at SIGCOMM, we decided to continue the work by addressing the reviewers’ comments. The reviewers mostly pointed to the missed opportunity of multi-view fusion, which provides 6DoF viewing and a denser set of points, and thus more content. Although the rejection wasn’t what I had hoped for, it gave me the opportunity to conduct research on my own. I made the plans and decisions myself, while Dr. Jaehong Kim guided me in the right direction. This page outlines the contributions I made to the project. The following video shows the final outcome: the full end-to-end implementation of BlenDR with multi-view fusion.

Review of BlenDR’s Structure (and the significance of each part)

Sender Side: The sender side’s jobs (depth filling and packing) are the main reasons efficient 2.5D/3D streaming is possible with BlenDR. Because we use 2D video codecs (H.26x), it is best to feed them smooth, continuous data, so that the codec does not spend motion prediction or compression effort on noise. In depth data, noise usually appears as depth holes, which are caused by inaccuracies in ToF measurements.

- Depth Filling: Smooths the data to remove noise that would otherwise add unnecessary computation in the 2D video codec

- Depth Packing (Encoding): Converts depth data into a standard 2D format that 2D video codecs can process
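To make the idea of depth filling concrete, here is a minimal sketch that fills holes with the nearest valid value to the left in the same row. This forward fill is only a simple stand-in and not BlenDR's actual filling algorithm; the helper name `fill_depth_holes` and the convention that a hole is stored as 0 are assumptions for illustration.

```python
import numpy as np

def fill_depth_holes(depth: np.ndarray) -> np.ndarray:
    """Forward-fill zero-valued holes along each row (hypothetical helper)."""
    valid = depth > 0
    # For valid pixels keep their own column index, for holes use 0.
    cols = np.where(valid, np.arange(depth.shape[1]), 0)
    # Running maximum gives the column of the most recent valid pixel.
    last_valid = np.maximum.accumulate(cols, axis=1)
    rows = np.arange(depth.shape[0])[:, None]
    # Holes at the start of a row have no valid pixel to their left,
    # so they keep the value of column 0 (which is itself a hole).
    return depth[rows, last_valid]
```

A row such as `[500, 0, 0, 520]` becomes `[500, 500, 500, 520]`, which gives the 2D codec a smooth region instead of an abrupt drop to zero.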

Receiver Side: The receiver side’s responsibility is to unpack the data to recover (or at least approximate) the original depth/color data. Depth unpacking is the inverse of depth packing and decodes accurately, retrieving 99% of the original data. The result, however, is still the depth-filled data and needs post-processing:

- Depth Unpacking (Decoding)

- Edge Detection
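The page does not say which edge detector BlenDR uses, so the following is only a sketch of one common choice: marking a pixel as an edge when the depth jumps sharply to its right or lower neighbor. The function name `depth_edge_mask` and the `jump_threshold` parameter are assumptions for illustration.

```python
import numpy as np

def depth_edge_mask(depth: np.ndarray, jump_threshold: float) -> np.ndarray:
    """Return 1 where the depth jumps sharply to a neighbor, else 0."""
    depth = depth.astype(np.float64)
    # Absolute difference to the right and lower neighbors; the last
    # column/row is compared with itself, so its difference is 0.
    dx = np.abs(np.diff(depth, axis=1, append=depth[:, -1:]))
    dy = np.abs(np.diff(depth, axis=0, append=depth[-1:, :]))
    return ((dx > jump_threshold) | (dy > jump_threshold)).astype(np.uint8)
```

The resulting 0/1 matrix can then be inverted and multiplied with the decoded depth to zero out edge pixels, where flying pixels tend to appear.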

My Contributions to BlenDR (Undergrad Thesis)

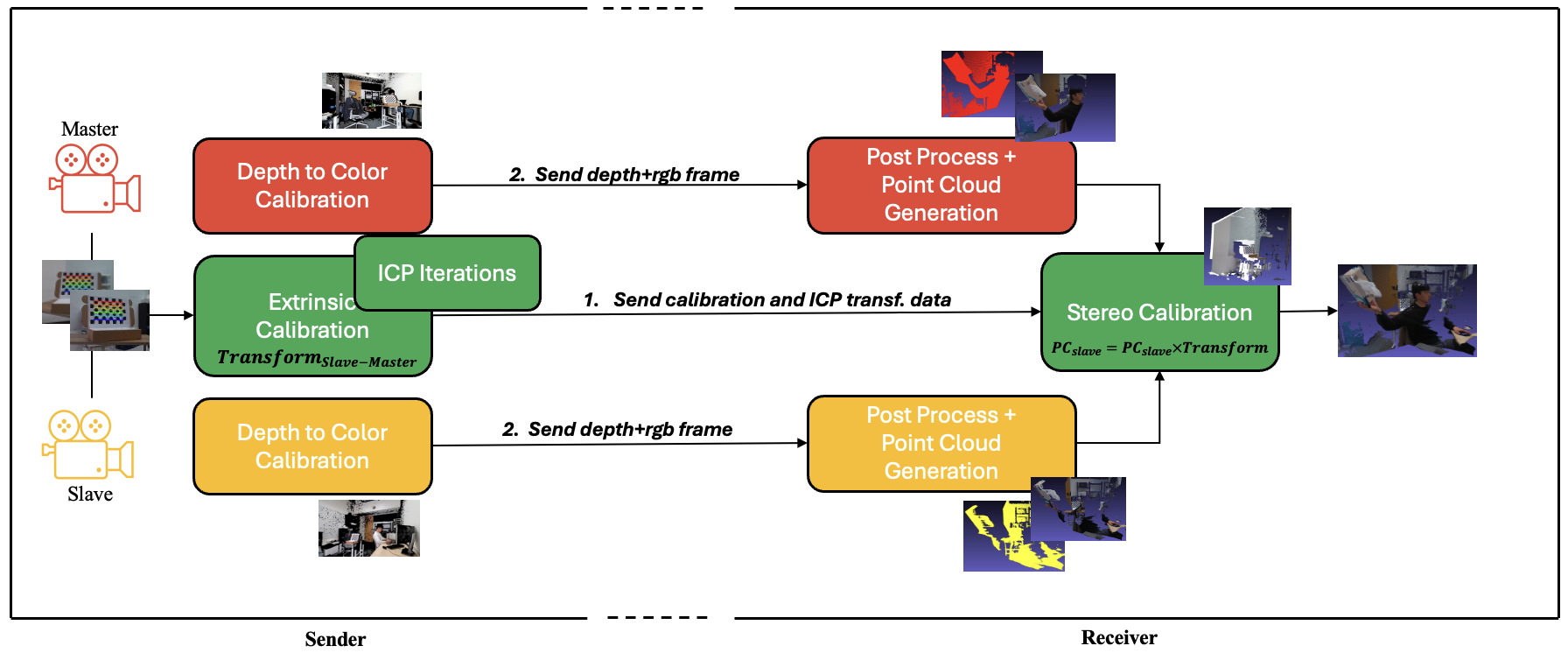

- Multi-view Fusion (End-to-End)

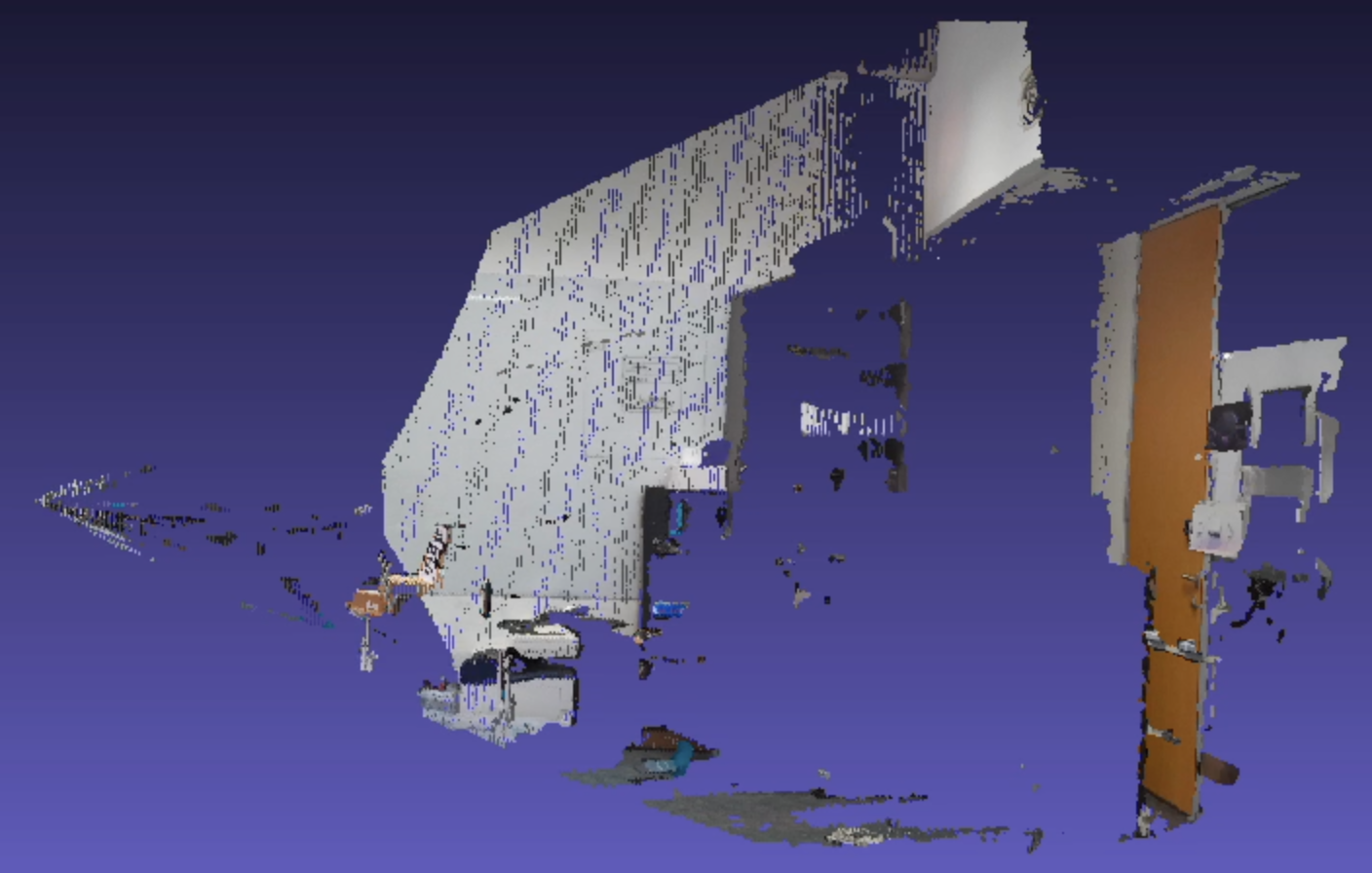

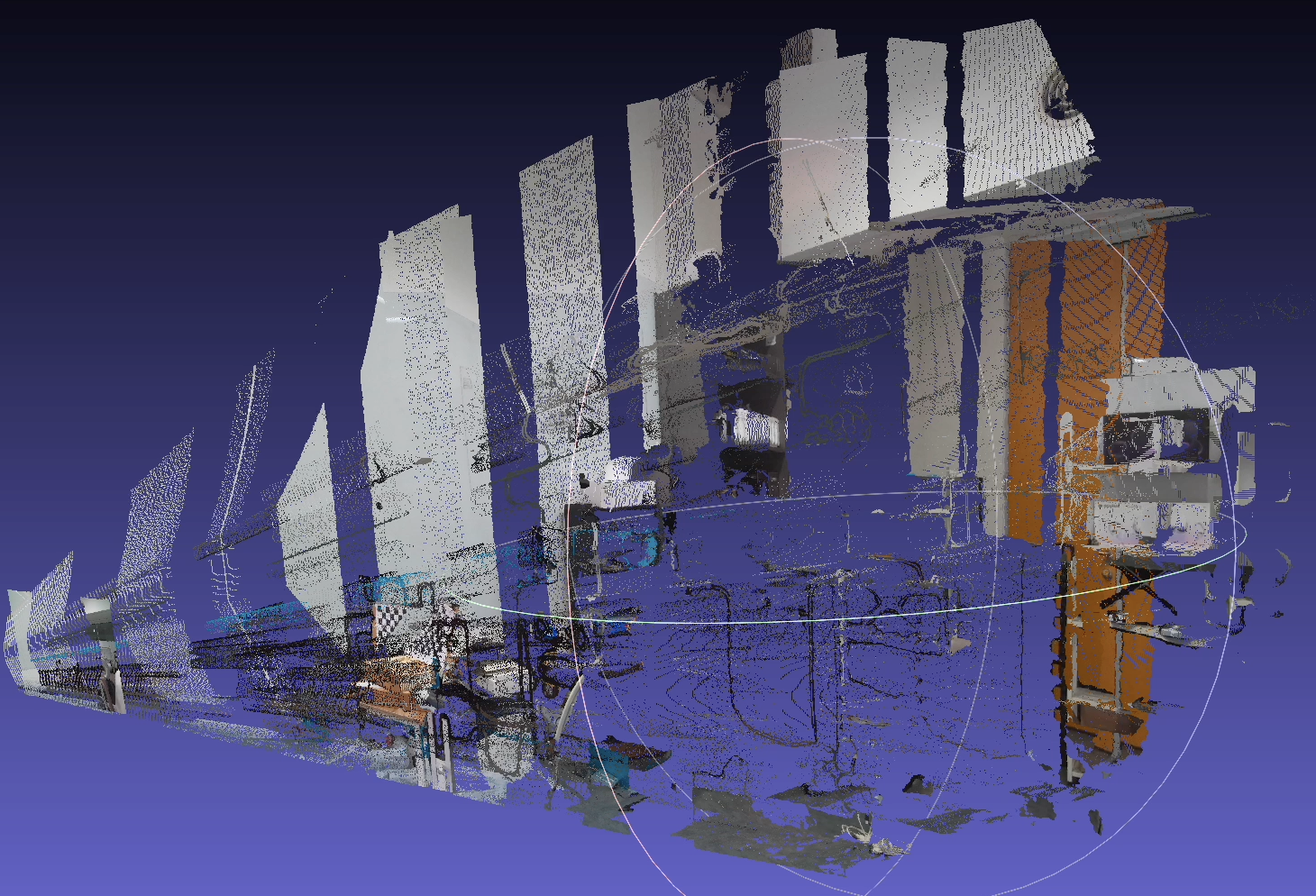

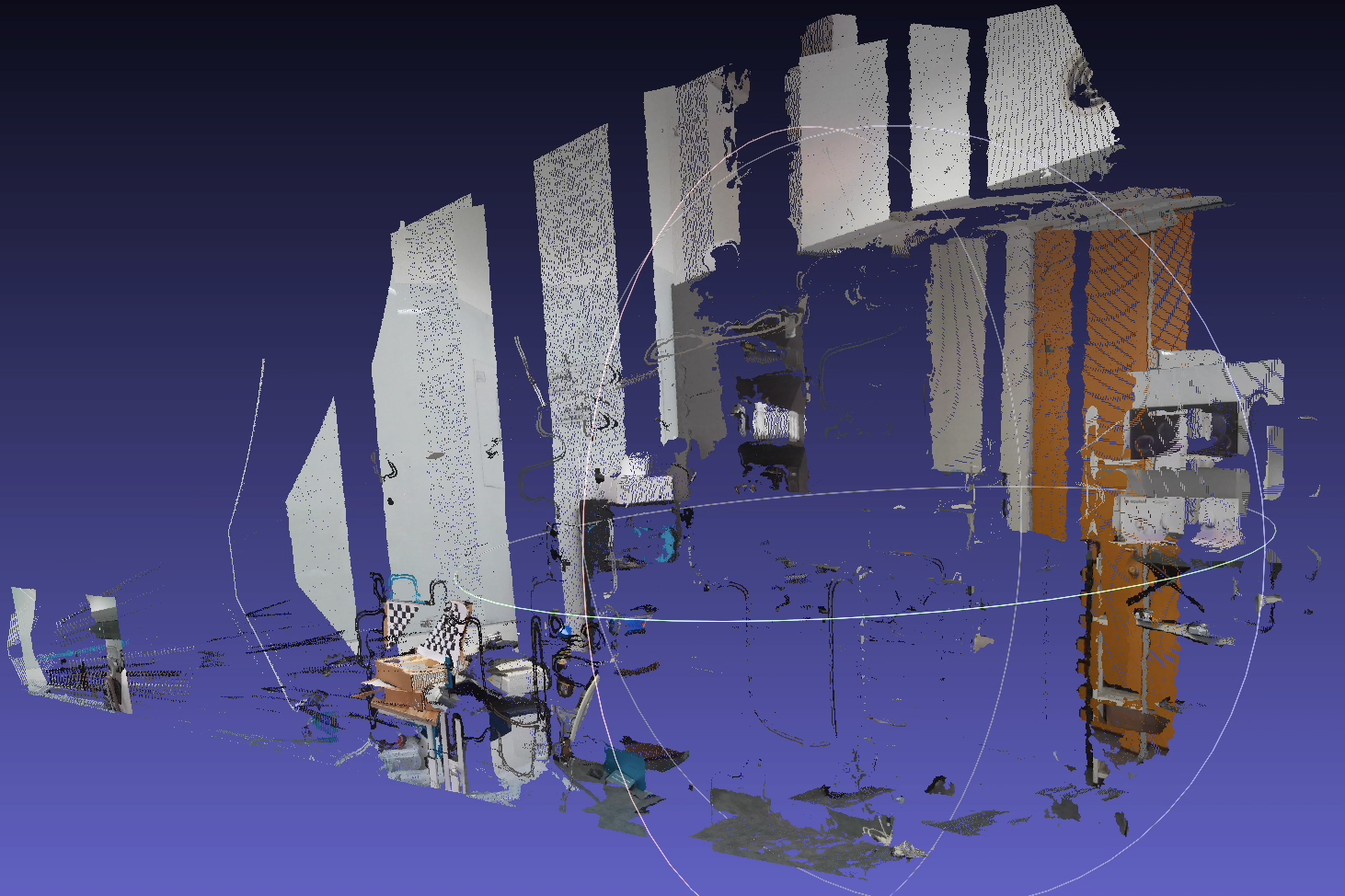

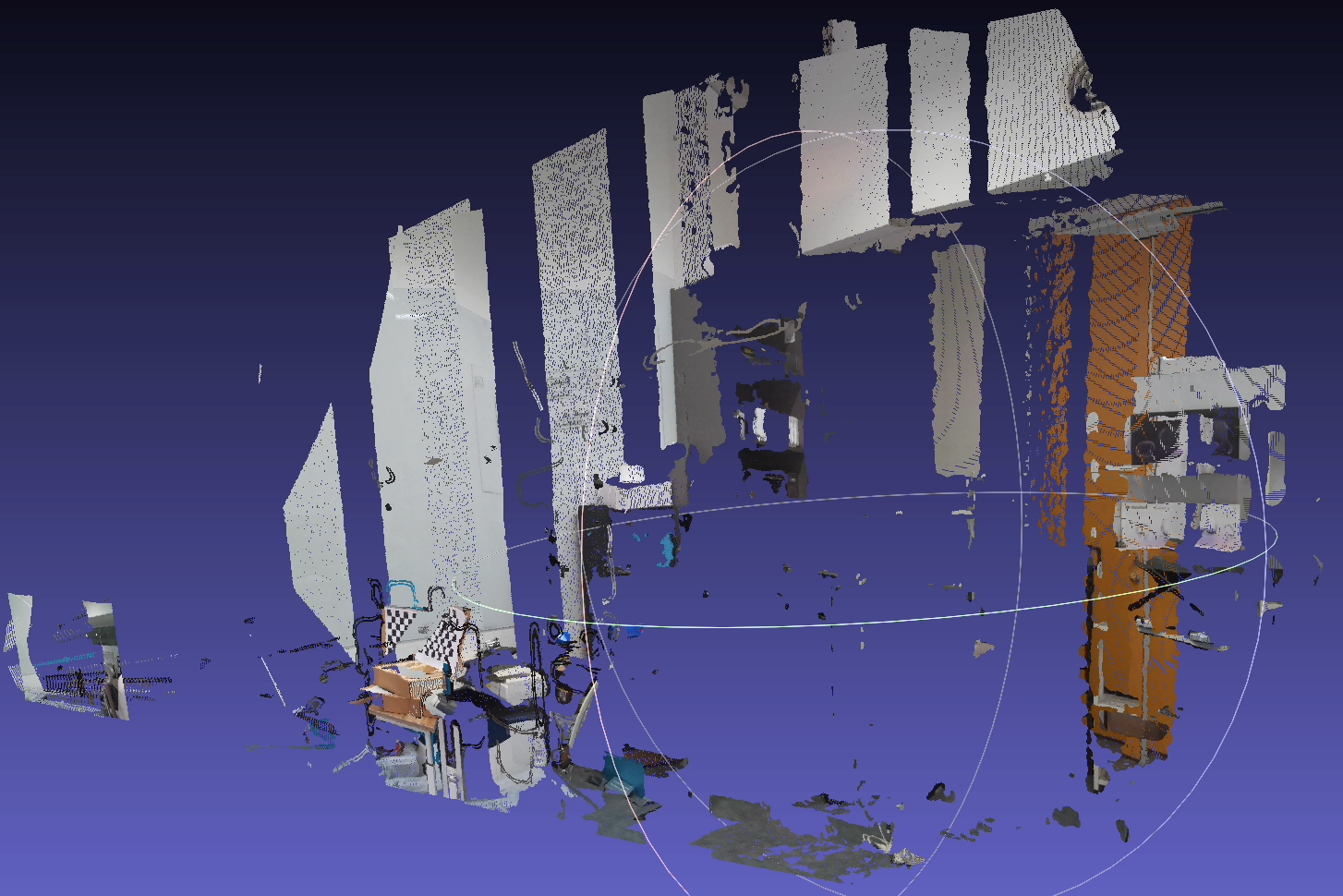

- Added two new steps to the initial system design: the iterative closest point (ICP) algorithm (Open3D, 2018) to refine the alignment of the calibrated point clouds, and statistical outlier removal (Open3D, 2018) to further remove flying pixels from the 3D point reconstruction

- Designed a new system to accommodate the new data streams, assigning ICP to the sender side (usually the server) to lessen the burden on the receiver and to allow an accurate refinement transform to be computed

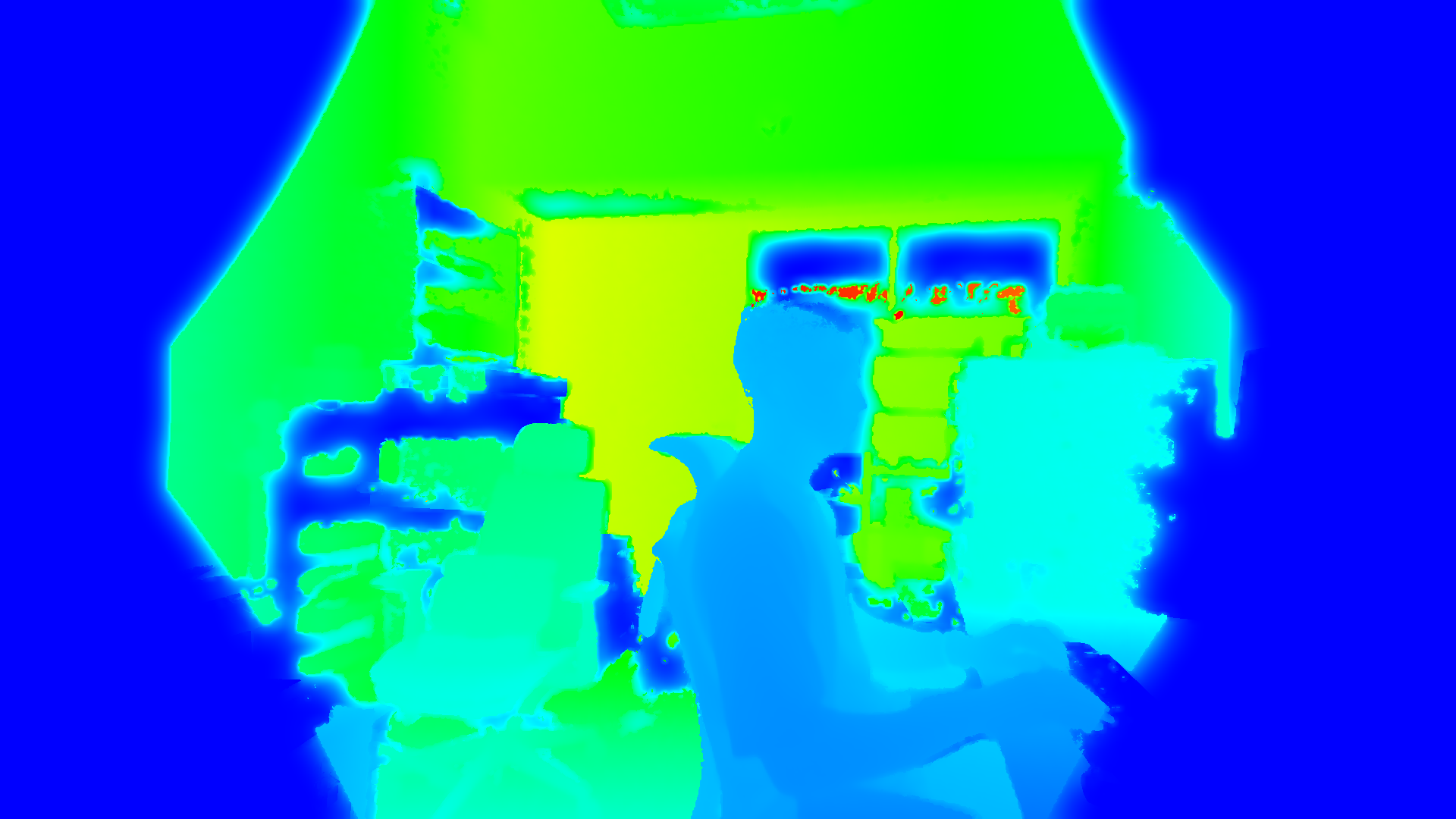
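For readers unfamiliar with ICP, the refinement step can be sketched in plain NumPy as below. The project itself relies on Open3D's registration (Open3D, 2018); this point-to-point version with brute-force matching is only an illustration, and the function names are my own.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c

def nearest_neighbors(src, dst):
    """Brute-force nearest neighbor in dst for each point in src."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(src)), idx])

def icp(src, dst, iterations=30):
    """Point-to-point ICP; returns the accumulated R, t aligning src to dst."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    for _ in range(iterations):
        # Match each point to its closest target, then solve for the best fit.
        idx, _ = nearest_neighbors(current, dst)
        R, t = best_rigid_transform(current, dst[idx])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

ICP only refines an alignment that is already roughly correct, which is why it runs after calibration. The brute-force matching here is quadratic in the number of points, so a real pipeline would use a spatial index.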

- Flying Pixel Problem: originally handled by the edge detection algorithm

- I addressed the flying pixel problem by correcting how the edge detection result is applied. Previously, the edge detection result was simply subtracted from the depth image, which did not remove the flying pixels as expected. The corrected version multiplies the decoded depth by the inverted edge mask, so edge pixels are set to zero. I also found that statistical outlier removal is widely used in 3D reconstruction research (Ma et al., 2021) (Teng et al., 2021), so it is applied after edge detection.

```python
# thr_result is a matrix of 1s and 0s indicating edges
inverted_thr_result = 1 - thr_result
depth_with_edges_removed = decoded_depth * inverted_thr_result
```

- The results are shown in the following images. The same dataset is used for the Triangle Wave method (Pece et al., 2011) as well, which clearly shows a poor result compared to our model.

BlenDR

Triangle Method

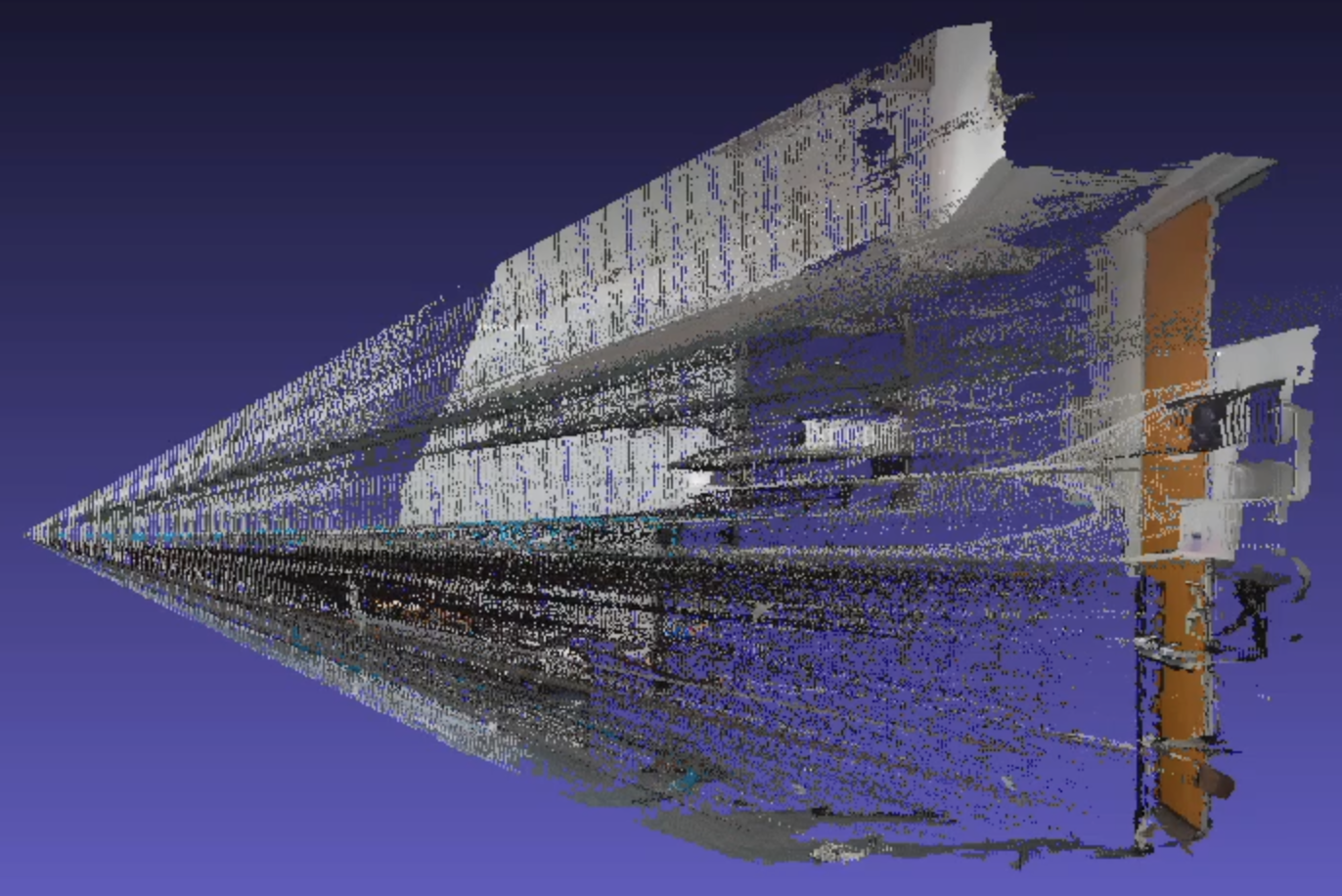

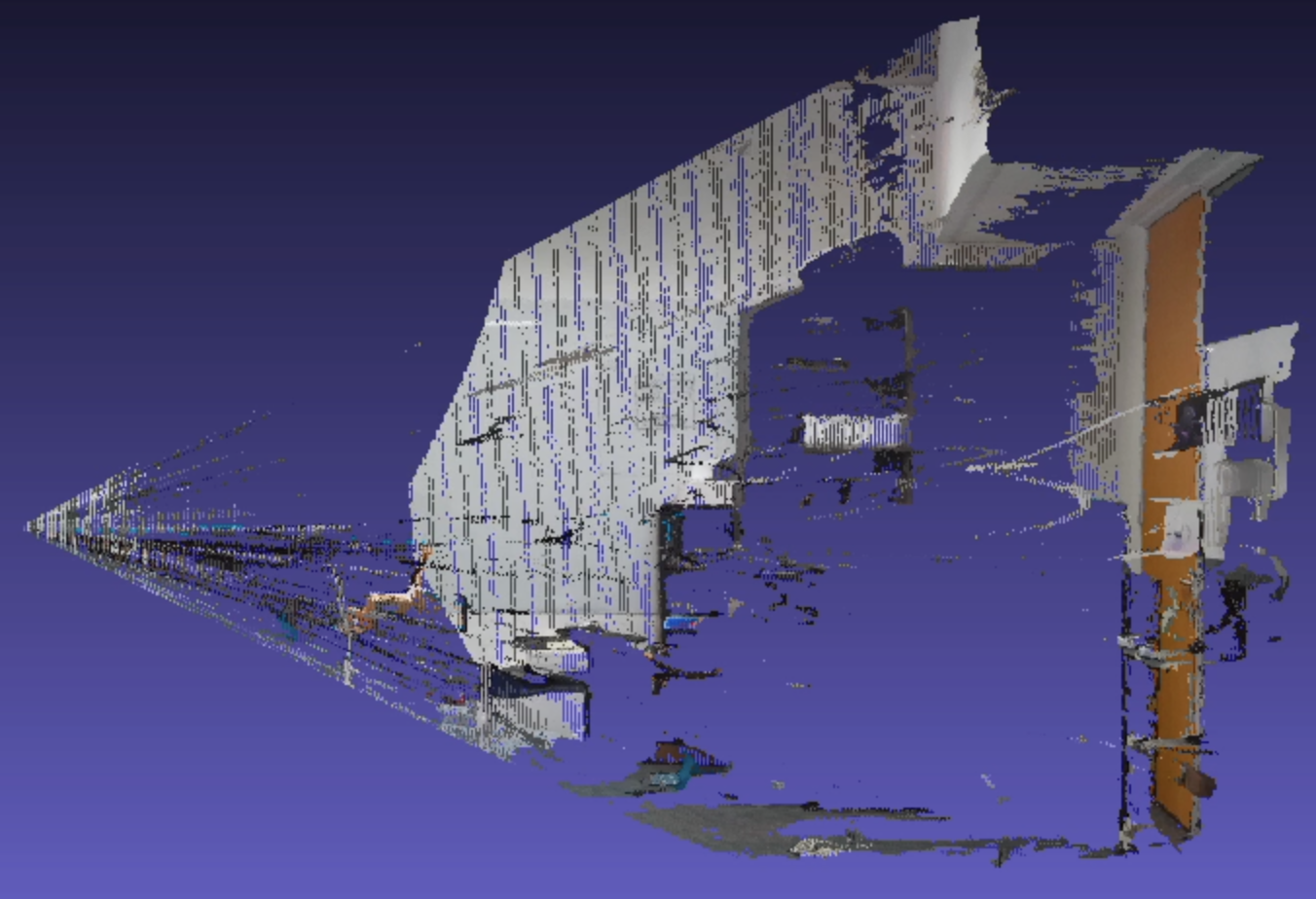
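The statistical outlier removal mentioned above can be sketched in NumPy as follows. It mirrors the idea behind Open3D's outlier removal (Open3D, 2018): a point is dropped when its mean distance to its nearest neighbors is far above the average over the whole cloud. This is an illustrative reimplementation rather than the project's code, and the default parameter values are assumptions.

```python
import numpy as np

def remove_statistical_outliers(points, nb_neighbors=8, std_ratio=2.0):
    """Drop points whose mean k-nearest-neighbor distance is unusually large."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Sort each row; column 0 is the point itself (distance 0), so skip it.
    knn = np.sort(dist, axis=1)[:, 1:nb_neighbors + 1]
    mean_knn = knn.mean(axis=1)
    # Threshold: global mean plus std_ratio standard deviations.
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return points[keep], keep
```

Flying pixels sit between the foreground and the background, far from any dense surface, so their neighbor distances stand out and they fall above the threshold. A smaller `std_ratio` removes points more aggressively.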

- Fair Comparison (vs GROOT) using Hausdorff Distance

- Measure: how ‘close’ the reconstructed point cloud is to the ground truth point cloud (lower is better)

- Without Fusion: Hausdorff distance computed with Open3D (cm): 3.49 (GROOT), 19.40 (Triangle Wave), 2.28 (ours)

- With Fusion: Hausdorff distance computed with Open3D (cm): 4.53 (GROOT), 16.35 (Triangle Wave), 2.76 (ours)
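For reference, the Hausdorff distance between two point clouds can be sketched in NumPy as below. The numbers above were computed with Open3D; whether the symmetric or a one-directional variant was used is not stated here, so this sketch shows both, and the function names are my own.

```python
import numpy as np

def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2))
    return d.min(axis=1).max()

def hausdorff(a, b):
    """Symmetric Hausdorff distance: the worse of the two directions."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))
```

Because the metric takes a maximum, a single stray point (such as a leftover flying pixel) is enough to raise the score, which makes it a strict measure of reconstruction quality.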

Conclusion

This undergraduate thesis was important to me as it was my first opportunity to plan, conduct, and execute research independently. Dr. Jaehong Kim helped by nudging me in the right direction and asking guiding questions that led me to answer my own questions about the next steps to take in the research.

References

2021

- Publication: Optimization of 3D Point Clouds of Oilseed Rape Plants Based on Time-of-Flight Cameras. Sensors, 2021

- Publication: Three-Dimensional Reconstruction Method of Rapeseed Plants in the Whole Growth Period Using RGB-D Camera. Sensors, 2021

2018

- Online Document: Colored Point Cloud Registration. 2018

- Online Document: Point Cloud Outlier Removal. 2018

2011

- Publication: Adapting Standard Video Codecs for Depth Streaming. In Joint Virtual Reality Conference of EGVE - EuroVR, 2011