Disaggregated Serving

References

Motivation - Disaggregated Serving

The pre-fill and decode stage of LLM inference have different characteristics: the pre-fill stage is compute-heavy and the decode stage is memory-bound. This is because the pre-fill stage is responsible for producing the first output token given a series of input tokens (which must be individually iterated through to calculate the contextual embeddings), while the decode stage relies on data movement from the KV-Cache to generate the next tokens relevant to the context.

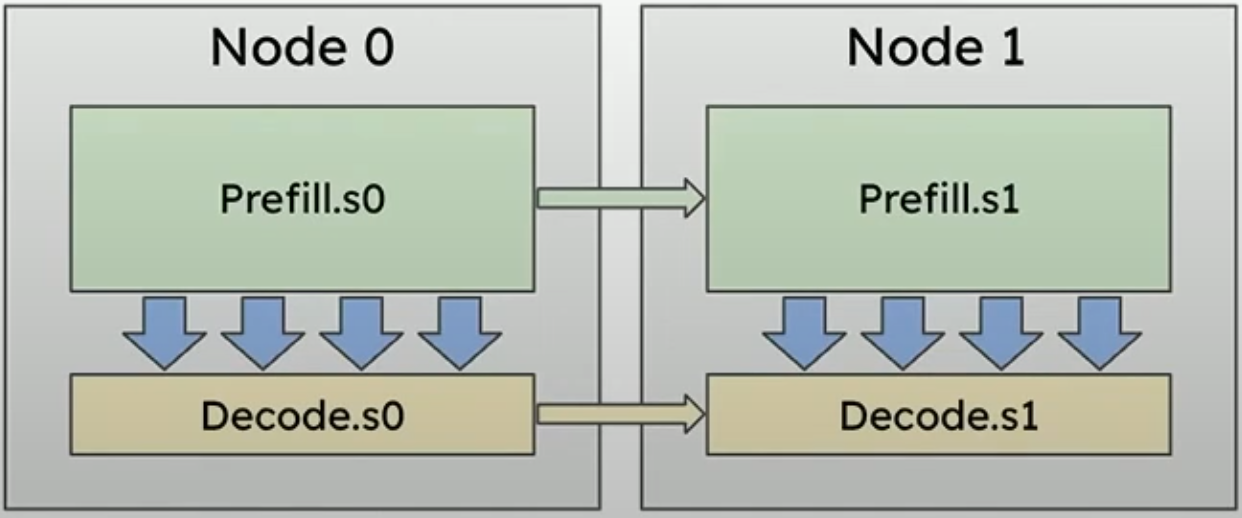

The different characteristics serve as the motivation to disaggregated serving: serving both pre-fill and decode stage in a single GPU would incur high opportunity costs due to the foregone benefits of using the idle resources caused by different resource loads. The goal of disaggregated serving is to allocate the pre-fill stage in a GPU and the decode stage in another GPU in a pipelined manner. Within these two sets of GPUs, one would be able to use different parallelization strategies that are more optimal for each resource usage patterns. For instance, the article mentions the use of low tensor parallelism (larger tensor sizes per GPU) for the pre-fill stage to maximize computation and minimize communication, and the use of high tensor parallelism for the decode stage to maximize memory bandwidth.

NVIDIA Dynamo’s Motivation: DistServe

Analysis of Prefill Instance

The prefill phase is compute-intensive as the KV vectors for all of the tokens must be calculated; a 13B parameter model processing a single 512 token prompt can fully saturate a A100 GPU.

- Saturation Point: When a GPU becomes compute-bound, adding more reqeusts to a batch does not improve efficiency and it adds more processing time for the batch, causing delays to all requests within the batch.

- Proposed Strategy: Find critical input length ($L_m$) for a given LLM and GPU setup. Batching multiple requests together is only done when the total combined length of the prompts is below the saturation threshold ($L_m$).

- Prefill Batch sizes are usually kept small because input prompts are usually very long

intra-op parallelism is better at low request rates, while inter-op parallelism is more effective at high request rates

- Total latency dominates queueing delay when request rates are low. Intra-op parallelism directly lowers the execution time of each request

- At higher request rates, queue waiting for requests grows and queuing delay becomes dominant. Inter-op parallelism creates a pipeline of model layers which allows system to handle more requests simultaneously

Analysis of Decode Instance

Without disaggregation, our batch size is limited due to the competition co-located prefill and decode stages. It becomes harder to meet latency goals with larger batch sizes, leading to a trade-off between TTFT and TPOT.

- Experiments show that for stringent TPOT SLOs, intra-op parallelism is essential

- Beyond this, inter-op parallelism is preferable to enhance throughput linearly

Optimization

High Node-Affinity

KV-Cache transmission is negligible, so we can make optimizations on prefill and decode stage independently. Given prior knowledge of workload arrival process and input/output length distribution, we can compute SLO attainment from distribution of input workload.

Low Node-Affinity

The goal is to place the stage responsible for KV-cache transmission (send/recv) within the same node to leverage NVLINK Bandwidth.

NVIDIA Dynamo

The goal of NVIDIA Dynamo is to intelligently switch between disaggregated serving, traditional serving, or parallelization strategies depending on the application (workload), using the Dynamo Planner, Smart Router, Distributed KV-Cache Manager, and NIXL (NVIDIA Inference Transfer Library).

- Dynamo Planner: Use TTFT and ITL metrics to make informed decisions on whether to use disaggregation, or if additional GPUs are needed for either decode/pre-fill stage.

- Input: GPU Capacity Metrics … (not that much detail)

- Output: Resource usage plan

- Dynamo Smart Router: When a new user input comes, calculates the overlap between existing KV-caches to route user input to most suitable worker, minimizing KV-cache recomputation

- Use cases that would have high rate of KV-Cache recomputation: agentic workflows / system prompts / single user multiturn chatbot

- hashes input requests and store in Radix Tree

- allows tracking of KV locations in large scale distributed inference

- uses KV cache eviction/addition algorithms to keep most relevant blocks are retained

- Dynamo KV Cache Manager: adds more hierarchies of memory where top-most is GPU memory, followed by host (CPU) memory, SSDs, and shared network storage.

- can free up GPU resources

- can reuse/retain historical KV Cache to prevent recomputation

- NIXL: point-to-point communication library with API to move data asynchronously across different tiers of memory using same semantics (network/hardware agnostic)

- considering disaggregation, efficient transmission of KV Cache from pre-fill workers to decode workers is needed

- transfer over RoCE, Infiniband, NVLink, or Ethernet can be served using NIXL